neural networks - Explanation of Spikes in training loss vs. iterations with Adam Optimizer - Cross Validated

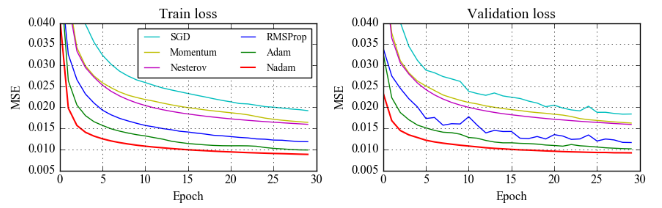

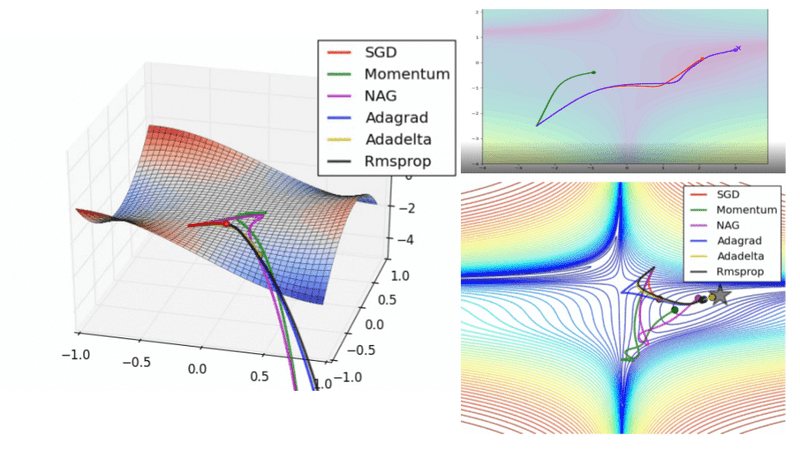

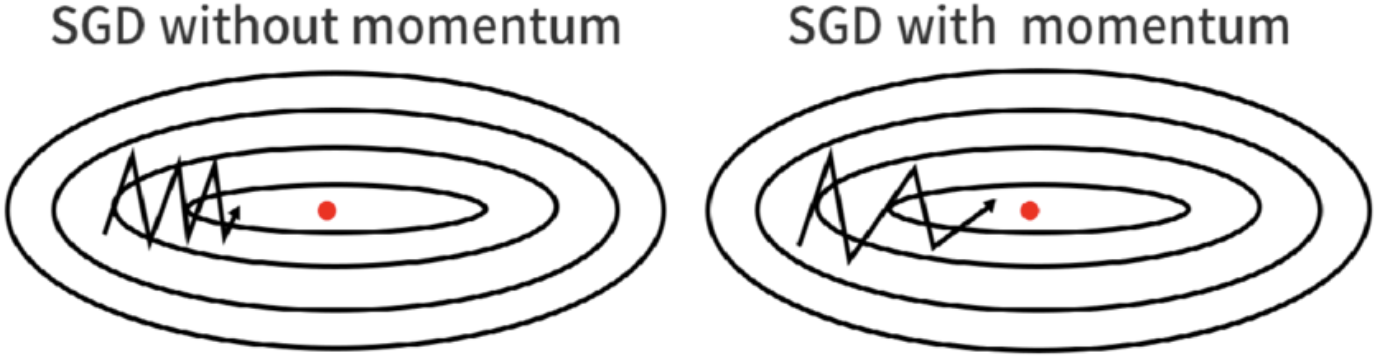

From SGD to Adam. Gradient Descent is the most famous… | by Gaurav Singh | Blueqat (blueqat Inc. / former MDR Inc.) | Medium

Assessing Generalization of SGD via Disagreement – Machine Learning Blog | ML@CMU | Carnegie Mellon University

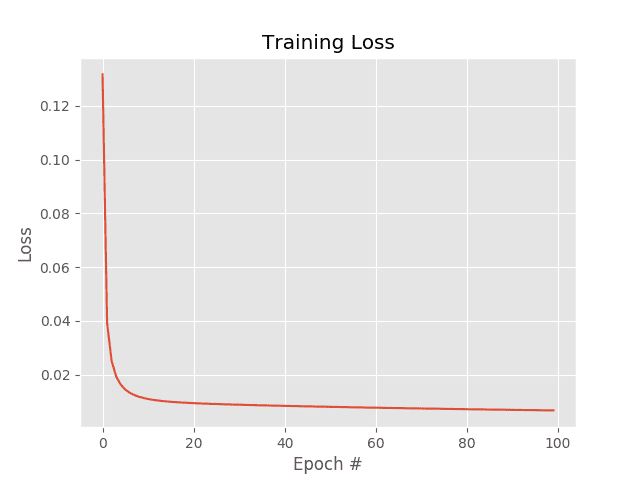

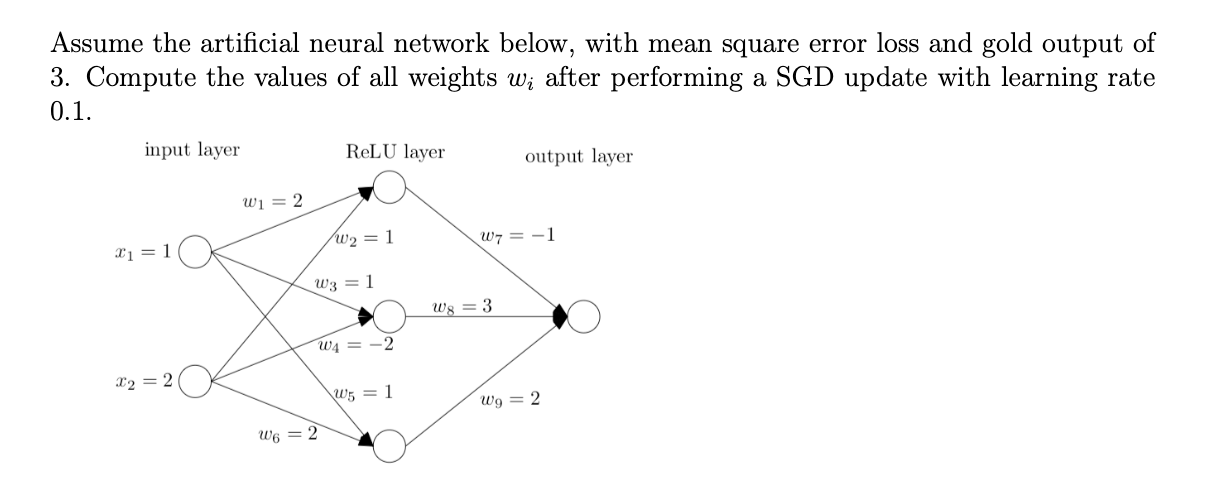

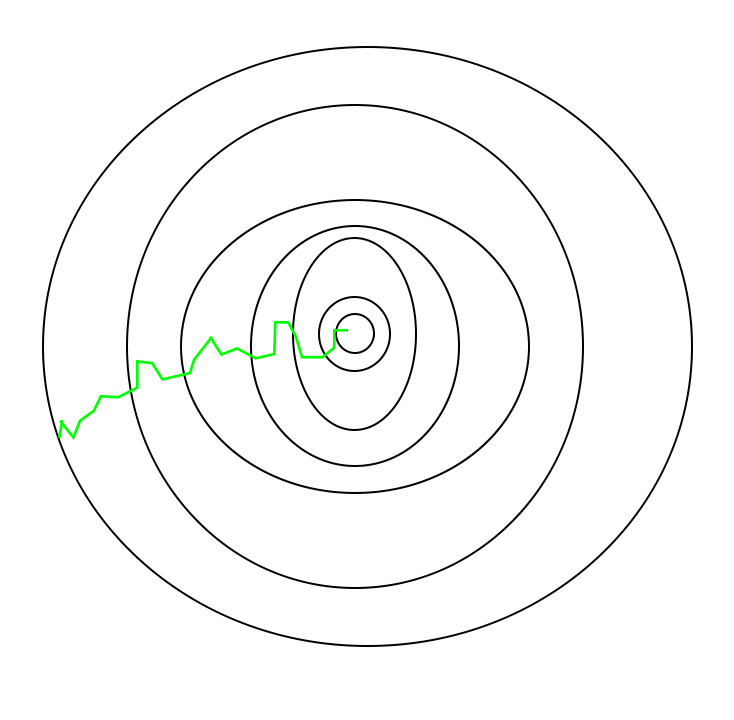

An Introduction To Gradient Descent and Backpropagation In Machine Learning Algorithms | by Richmond Alake | Towards Data Science

Chengcheng Wan, Shan Lu, Michael Maire, Henry Hoffmann · Orthogonalized SGD and Nested Architectures for Anytime Neural Networks · SlidesLive

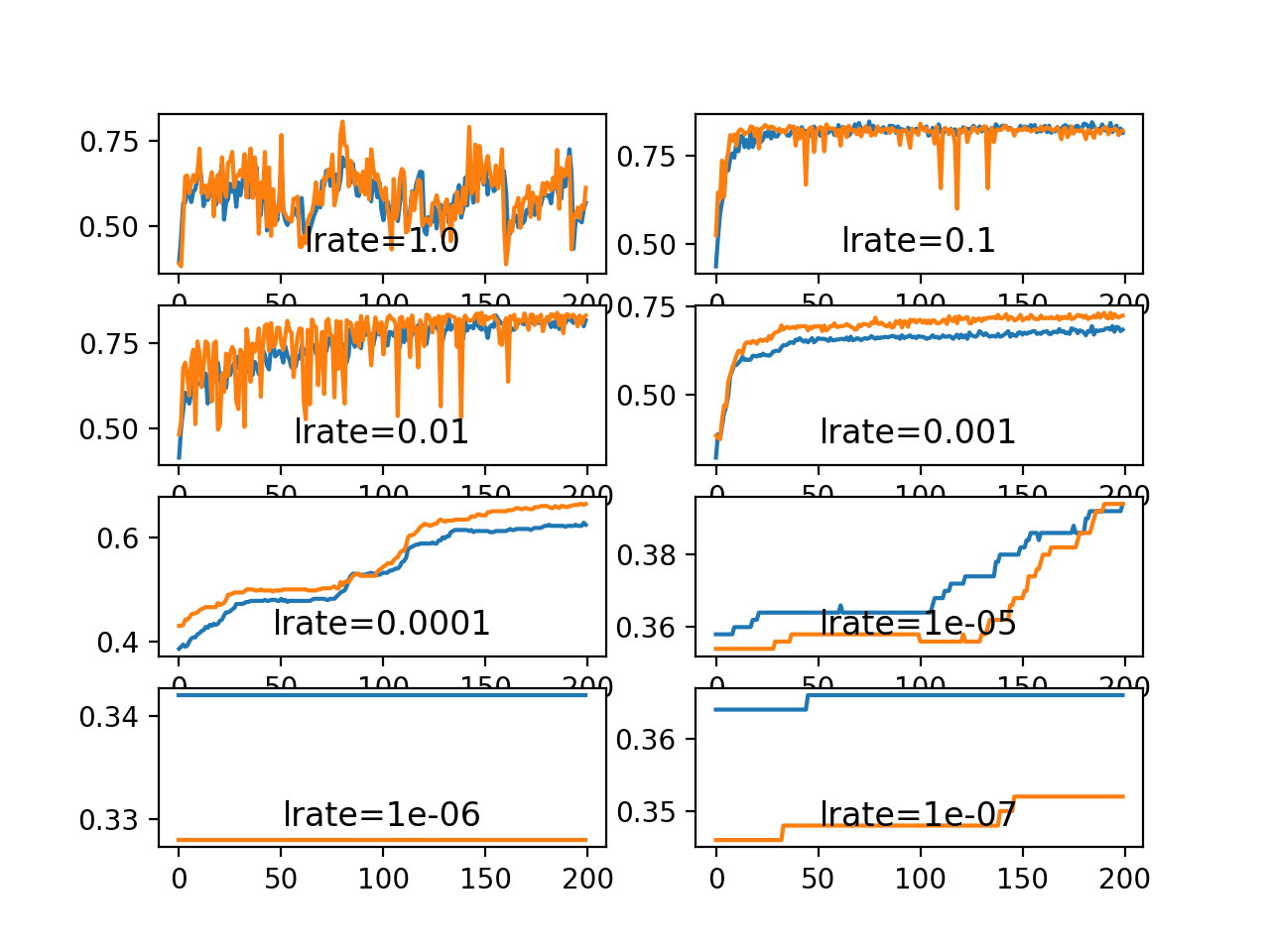

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

A (Quick) Guide to Neural Network Optimizers with Applications in Keras | by Andre Ye | Towards Data Science

Hessians - A tool for debugging neural network optimization – Rohan Varma – Software Engineer @ Facebook